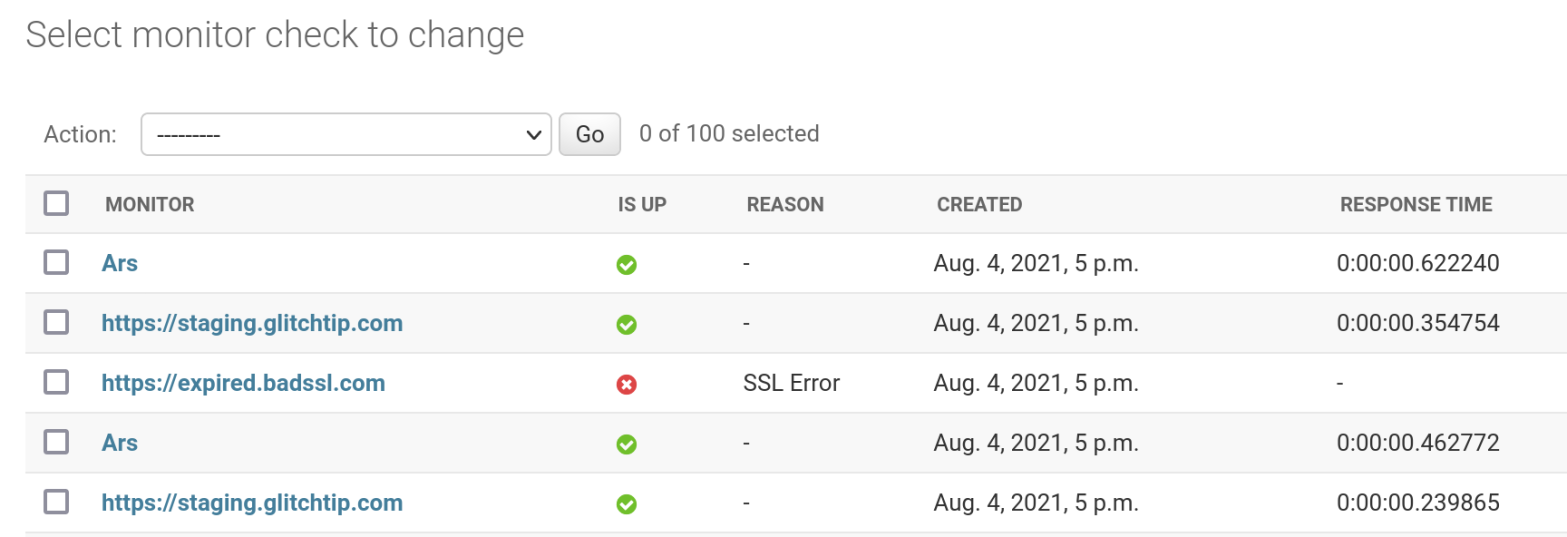

My motivation – I wanted to make a network monitoring service in Python. Python isn’t known for its async ability, but with asyncio it’s possible. I wanted to include it in a larger Django app, GlitchTip. Keeping everything as a monolithic code base makes it easier to maintain and deploy. Go and Node handle concurrent… Continue reading Monitor network endpoints with Python asyncio and aiohttp

Author: David

I am a supporter of free software and run Burke Software and Consulting LLC.

I am always looking for contract work, especially for non-profits and open source projects.

Open Source Contributions

I maintain a number of Django-related projects including GlitchTip, Passit, and django-report-builder. You can view my work on GitLab.

Academic papers

Incorporating Gaming in Software Engineering Projects: Case of RMU Monopoly in the Journal of Systemics, Cybernetics and Informatics (2008)

Deploy Saleor E-commerce with Kubernetes and Helm

Saleor is a headless, Django-based e-commerce framework. This post will show how to deploy Saleor using the Django Helm Chart. It will focus on deploying the Django backend. The dashboard is a static HTML site and is left out. The front-end is something you should build yourself. First you need a Docker image. You can… Continue reading Deploy Saleor E-commerce with Kubernetes and Helm

Deploy Django with Helm

This is a follow-up post to Deploy Django with helm to Kubernetes, which focused on the CI pipeline. This post will highlight a generic Django Helm Chart I made for GlitchTip, an open source alternative to Sentry issue tracking. Note that Kubernetes and Helm are very complex systems that a simple Django app does… Continue reading Deploy Django with Helm

Wagtail Single Page App Integration news

wagtail-spa-integration is a Python package I started with coworkers at thelab. Version 2.0: Wagtail SPA Integration 2.0 is released! The release is actually maintenance only, but now requires Wagtail 1.8+, thus making it a potentially breaking change. Coming soon: version 3.0 with wagtail-headless-preview. A major feature of Wagtail SPA Integration is preview support. Torchbox (the… Continue reading Wagtail Single Page App Integration news

Deploy Django with helm to Kubernetes

This guide attempts to document how to deploy a Django application with Kubernetes while using continuous integration. It assumes basic knowledge of Docker and running Kubernetes and will instead focus on using helm with CI. Goals: must be entirely automated and deploy on git pushes; must run database migrations once and only once per deploy… Continue reading Deploy Django with helm to Kubernetes

Django Rest Framework ModelViewSets with natural key lookup

DRF ModelViewSet can easily support detail views by slug via the lookup_field attribute. But what if you had compound keys (aka natural keys)? For example, a URL structure like /api/computers/<organization-slug>/<computer-slug>/. A computer slug may only be unique per organization. That means different organizations may have computers with the same slug. But no computer may have… Continue reading Django Rest Framework ModelViewSets with natural key lookup

Angular Wagtail 1.0 and getting started

Angular Wagtail and Wagtail Single Page App Integration are officially 1.0 and stable. It’s time for a more complete getting started guide. Let’s build a new app together. Our goal will be to make a multi-site enabled Wagtail CMS with a separate Angular front-end. When done, we’ll be set up for features such as Map… Continue reading Angular Wagtail 1.0 and getting started

Talk: Integrating Angular with “Headless” Wagtail CMS

I gave a talk at the Django NYC meetup. Here is a link. I spoke about Wagtail SPA Integration and Angular Wagtail. I am hoping to call both projects 1.0 in September. Please give them a test and report bugs!

Controlling a ceiling fan with Simple Fan Control

I released Simple Fan Control today on Google Play, web, and source on GitLab. This project’s genesis was the purchase of a Hunter Advocate fan with Internet connectivity. Its app doesn’t work, which I wrote about recently. The app is built with NativeScript and works by interacting with Alya Network’s Internet of Things (IoT) service.… Continue reading Controlling a ceiling fan with Simple Fan Control

How to set up a Hunter fan Wifi control by decompiling the app

Update: I made an open source web/Android app that can do this for you. Note you’ll still need the SimpleConnect app to connect the fan to Wi-Fi. I got a Hunter fan recently that was supposed to be controllable via an app called SimpleConnect. Looking at the reviews, it doesn’t work. It gets stuck on… Continue reading How to set up a Hunter fan Wifi control by decompiling the app